Web Hack Principles

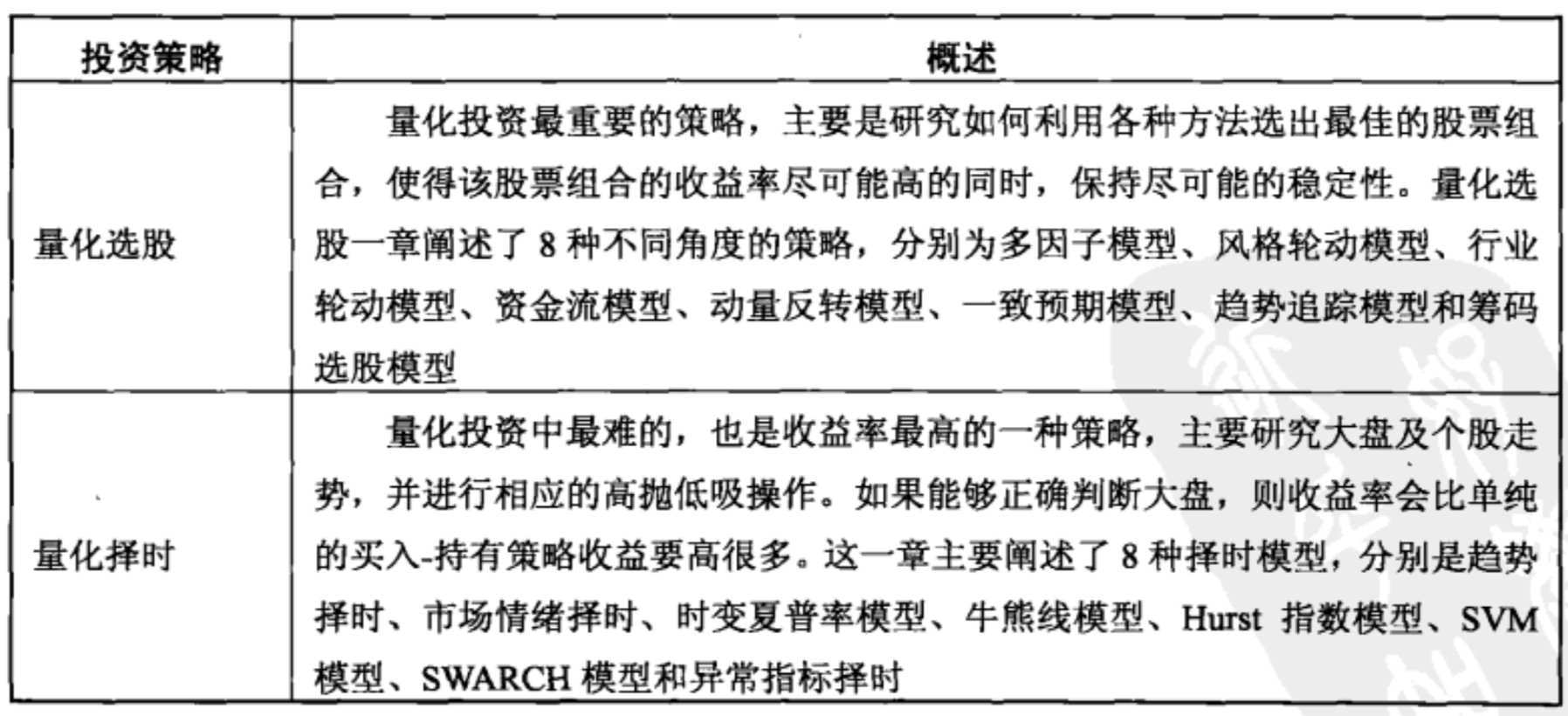

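Quantitative Market Timing

Determine whether the overall market is rising, falling, or consolidating

Metrics

Returns

Stock Selection

Select a portfolio of stocks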

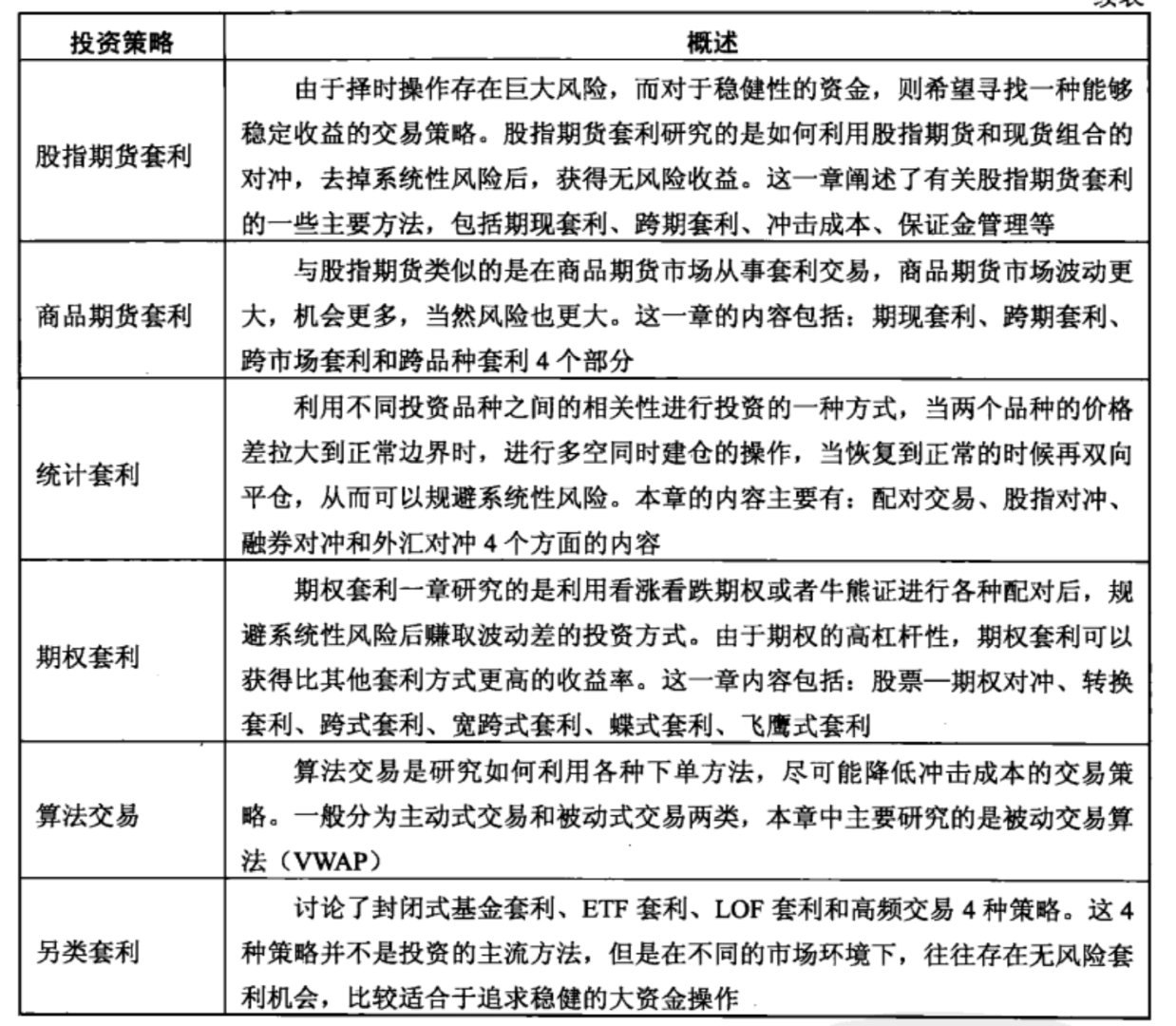

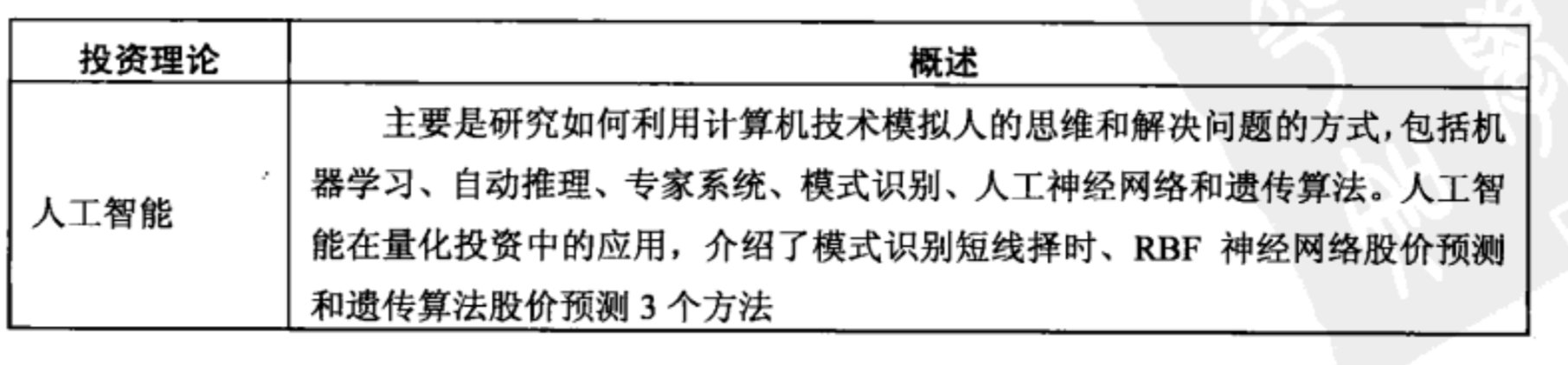

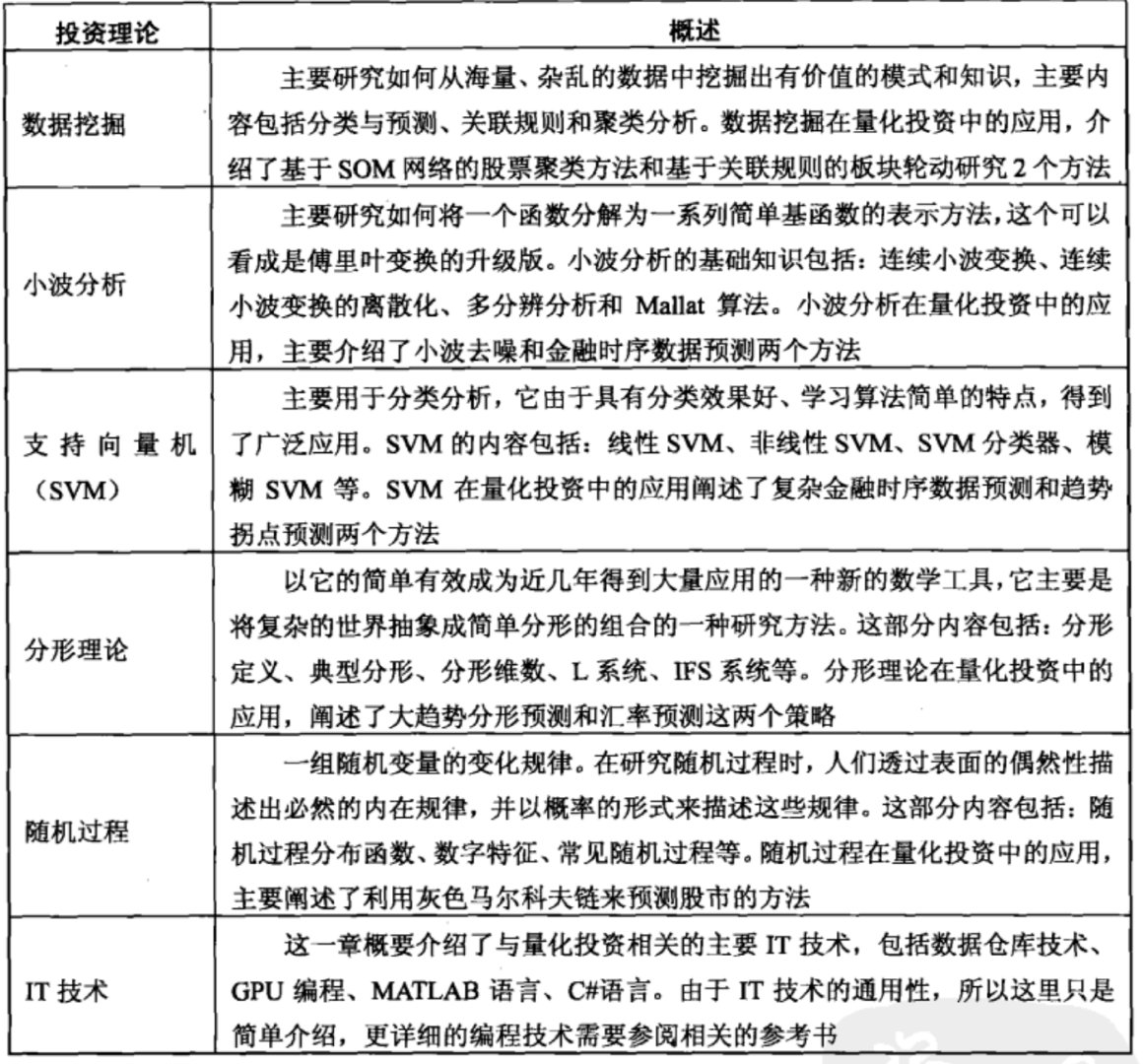

Overview

Lottery Problem

| Prize | Number of winners or weight |
|---|---|
| 1 | 500 |
| 5 | 100 |
| 10 | 20 |
| 50 | 5 |
| 100 | 1 |
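
If the second column is read as a weight, a single draw can be sketched as a weighted random choice. The names `PRIZES`, `WEIGHTS`, `draw_prize`, and `prize_probabilities` are hypothetical and chosen only for illustration. The notes do not say which interpretation (winner count or weight) is intended, so this is one possible reading, not a definitive solution.

```python
import random

# Prize values and weights copied from the table above.
# Assumption: the second column is treated as a relative weight.
PRIZES = [1, 5, 10, 50, 100]
WEIGHTS = [500, 100, 20, 5, 1]


def prize_probabilities():
    """Return the probability of each prize: weight / sum of all weights."""
    total = sum(WEIGHTS)
    return {prize: weight / total for prize, weight in zip(PRIZES, WEIGHTS)}


def draw_prize(rng=random):
    """Draw one prize with probability proportional to its weight.

    `rng` may be the `random` module or a seeded `random.Random` instance.
    """
    return rng.choices(PRIZES, weights=WEIGHTS, k=1)[0]


if __name__ == "__main__":
    for prize, p in prize_probabilities().items():
        print(f"prize {prize}: probability {p:.4f}")
    print("one draw:", draw_prize())
```

Under this reading the weights sum to 626, so the top prize has probability 1/626 on each draw. If the column instead means a fixed number of winners, the draw would need to be done without replacement from a pool of 626 tickets, which this sketch does not cover.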

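Block tx Parsing

Parsing blockchain transaction data

Block Consensus

Because a distributed system involves multiple nodes, many complex situations can arise. As the number of nodes grows, node failures, faults, and crashes become routine, and handling the resulting edge cases and unexpected conditions makes distributed consistency harder to achieve. Interference with, or outright loss of, network communication between nodes, together with differences in how fast individual nodes run, are further obstacles to achieving consistency in a distributed system.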

Python Deployment

Deployment scripts, kept for later use